Facial recognition is so last week.

If this Russian tech company has its way, emotion recognition will be the cool kid on the block, with serious consequences for everyone’s privacy and personal data.

NTechLab sparked controversy last year when it released FindFace, an app that lets users match a photo of a stranger’s face to that person’s profile on VKontakte, the Russian equivalent of Facebook.

Some users turned the app against sex workers and porn actresses, identifying them through their personal profiles and harassing them, though the firm maintained that it wasn’t breaking any privacy regulations.

But the new version of FindFace goes even further, adding emotion recognition to the usual identification of face, age, and gender.

FindFace’s neural network receives the part of a photo that contains a face, processes it, and generates a feature vector: essentially a set of 160 numbers that describes the face.
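NTechLab hasn’t said how FindFace compares these vectors, so what follows is only a minimal sketch of the general idea, assuming faces are matched by cosine similarity between 160-number vectors. The vectors are random stand-ins rather than output from a real neural network, and the helper names (`random_face_vector`, `find_closest_face`) are hypothetical.

```python
import math
import random

DIM = 160  # length of the feature vector reported for FindFace


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def random_face_vector(rng):
    """Hypothetical stand-in for a neural network's output: a random unit vector."""
    return normalize([rng.gauss(0.0, 1.0) for _ in range(DIM)])


def cosine_similarity(a, b):
    """Dot product of two unit-length vectors (1.0 means identical direction)."""
    return sum(x * y for x, y in zip(a, b))


def find_closest_face(query, database):
    """Brute-force search: return (index, score) of the most similar stored vector."""
    best_index, best_score = -1, -2.0
    for i, vec in enumerate(database):
        score = cosine_similarity(query, vec)
        if score > best_score:
            best_index, best_score = i, score
    return best_index, best_score


if __name__ == "__main__":
    rng = random.Random(42)
    # A toy "database" of 1,000 stored faces.
    database = [random_face_vector(rng) for _ in range(1000)]

    # Simulate a new photo of person #123: their stored vector plus a little noise.
    query = normalize([x + rng.gauss(0.0, 0.02) for x in database[123]])

    index, score = find_closest_face(query, database)
    print(index, round(score, 3))
```

The loop checks every stored face, which is fine for a toy database of 1,000. A system searching a billion faces in under half a second would very likely need something faster, such as an approximate nearest-neighbour index.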

NTechLab claims its software can search a database of a billion faces in less than half a second, giving law enforcement real-time crime-fighting capabilities.

As for accuracy, the company’s performance at the 2015 MegaFace competition is already legendary: it beat Google and a Beijing university, achieving 73% accuracy against a database of 1 million pictures.

Now, the algorithm boasts a 93% accuracy rate when it comes to identifying a face in a database of 10,000 faces.

Like other companies in the facial recognition field, NTechLab uses deep learning for its system. And it seems to be working just fine.

“We’ve built a product with exceptional functionality and applied it not only to faces or gender, but also emotions. The idea is that we teach emotions to our system in the laboratory. We train the algorithm to understand the kind of emotion they see on the picture and then correct it the way you would teach a kid,” NTechLab’s CEO, Mikhail Ivanov, told Mashable.

While the identity of NTechLab’s clients is shrouded in secrecy, it’s been reported that the company has been working with Moscow city government to add the software to the capital’s 150,000 CCTV cameras.

The firm has also generated a lot of buzz in Russia amid reports that its technology was used to monitor crowds at FIFA’s Confederations Cup in June.

Ivanov was adamant that the firm doesn’t work directly with law enforcement; it merely develops solutions for the public safety vertical.

Russian authorities likely used the public online version of FindFace, so it’s hard to predict whether they’ll use it for next year’s World Cup. What is known is that NTechLab has more than 2,000 customers in countries including the UK, the U.S., China (where it plans to open offices in 2017), Australia, and India.

Besides public safety, NTechLab sees other possible applications in dating, security, banking, retail, entertainment, and events.

“You can use it to track the level of service on your customers, to understand the behaviour of the guy you’re going to hire based on his emotional reactions during a job interview, to grasp the emotions of a crowd during a concert, their emotional temperature,” Ivanov said.

“Behavioural analytics could combine face recognition and big data for customer behaviour analysis in hotels and casinos.”

Companies could also use it to analyse employee productivity over the course of the week and cross-reference it with happiness levels, Ivanov added.

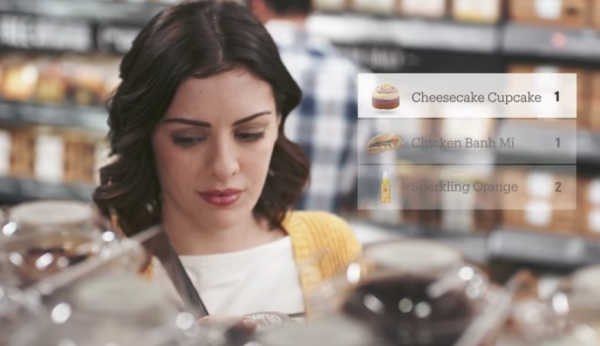

Facial recognition is the buzzword of the moment — from Amazon scanning faces in its grocery store to police using the software on 117 million Americans — albeit with privacy concerns over how the data is collected and used.

There are also major concerns about bias in the way policing is carried out around the world.

“In many Western countries, including the U.S., the UK, and France, people of colour are more likely to be stopped and searched,” said Eva Blum-Dumontet, research officer with advocacy group Privacy International.

“Research conducted on algorithms used for predictive policing and by the U.S. court system has shown the algorithms tend to reproduce these biases. What would be the impact for emotional recognition? For instance emotions aren’t necessarily expressed the same way in every culture, will that affect the likeliness of being identified as suspicious?”

“We should be worried about living in a society where we are becoming suspects and not citizens,” Blum-Dumontet added.

But NTechLab claims facial recognition technology should be implemented everywhere, not only to serve you but also to keep you safe.

“We don’t believe in privacy,” Ivanov said. “We live in a world of CCTV cameras everywhere and internet communications. The concept of privacy applied to the world of our grandfathers, not our world.”

Facial recognition should be integrated in CCTV for public safety, Ivanov added, calling the use of facial recognition “a modern way to keep us safe.”

Ivanov also called for a public debate over regulating the technology.

“It’s like with guns. The use of technology cannot be restricted, it should be regulated.”

The technology has been in use in the UK for a while now, although the first arrest attributed to it came only in June this year.

In 2015, Leicestershire police scanned the faces of 90,000 festival-goers at Download Festival, checking them against a list of wanted criminals across the country. It was the first time facial recognition technology (in this case, NeoFace) had been used at a public outdoor event anywhere in the UK.

Privacy campaigners, along with Muse frontman Matt Bellamy, expressed their fury at the authorities, who had only casually mentioned the surveillance project on Police Oracle, a police news and information website.

Police didn’t use any other method to warn festival-goers about the controversial initiative.

If FindFace gets its way, they won’t even need to mention it.